How To Run AI Models From Hugging Face

.gif)

You discover a cool model on Hugging Face. Maybe it writes text, recognizes cats in photos, or turns speech into subtitles. You click “Use,” and suddenly the question hits you: how do you actually run it?

Hugging Face makes this easier than it looks. You don’t need to be a machine learning wizard to try a model. You just need to pick the right path. Sometimes that means a one-line pipeline, other times it means dropping down into full control with AutoModel.

If you want zero setup, there’s even an API that does the work for you.

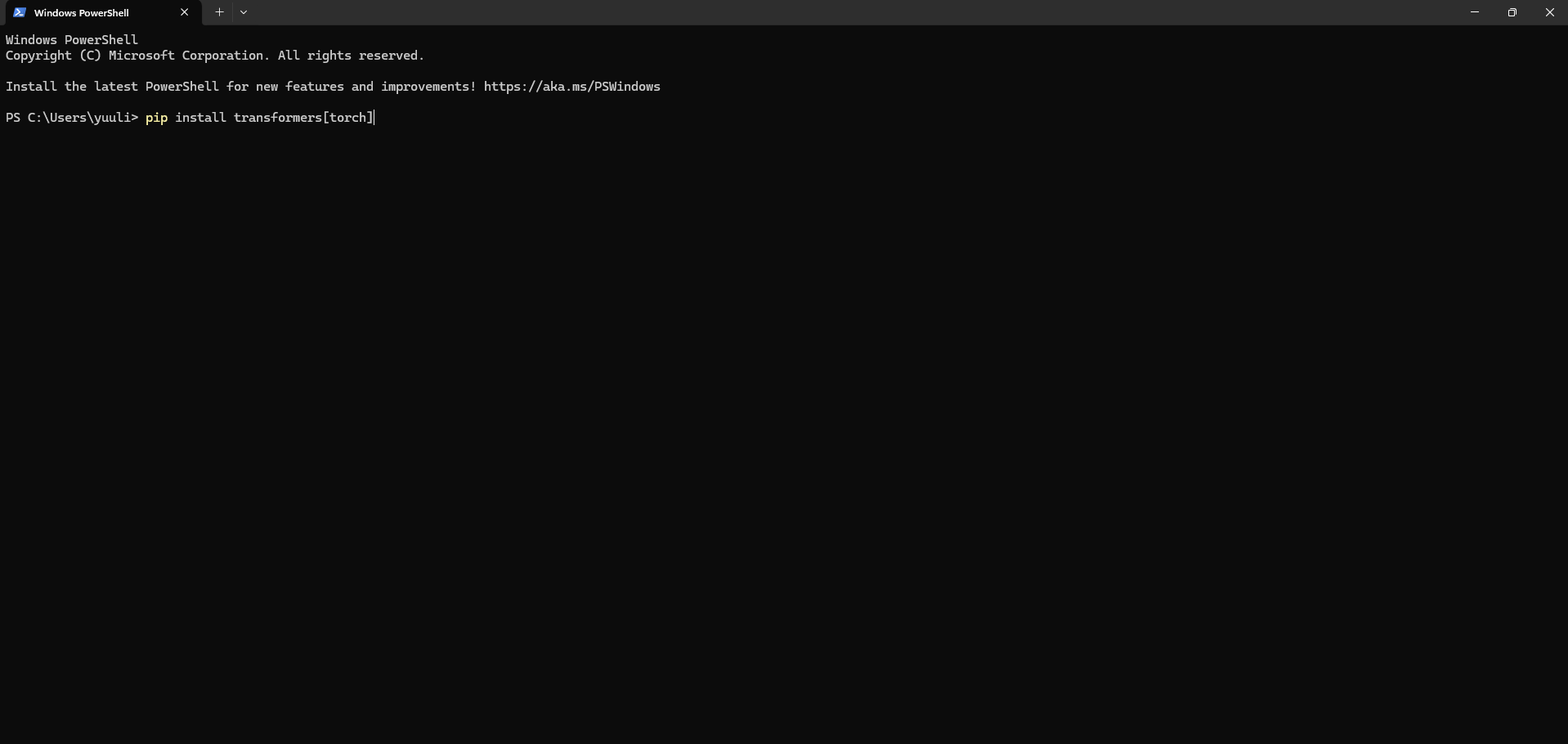

Step 1: Set Up Your Environment

Before you can run anything, you need a working Python setup. The safest move is to create a virtual environment so your dependencies don’t clash with other projects.

Option A: venv (lightweight, built into Python)

python -m venv .env

source .env/bin/activate # Linux/macOS

.env\Scripts\activate # Windows

Option B: conda (heavier but flexible)

conda create -n hf-env python=3.9

conda activate hf-env

Now install Hugging Face libraries. At minimum you need transformers. You also need a backend like PyTorch or TensorFlow.

pip install transformers[torch] # for PyTorch

pip install transformers[tf-cpu] # for TensorFlow

pip install huggingface_hub # for hub interaction

At this point you’re ready to test. A quick sanity check:

python -c "from transformers import pipeline; print(pipeline('sentiment-analysis')('I love Hugging Face!'))"

If you see a prediction, your setup is working.

Step 2: Pick Your Path to Running Models

Hugging Face gives you three main ways to run models.

Option 1: Pipeline API (The Quickest)

The pipeline() function is Hugging Face’s secret sauce for beginners. It handles preprocessing, running the model, and formatting the output in one call.

Example: sentiment analysis

from transformers import pipeline

classifier = pipeline("sentiment-analysis")

print(classifier("This library is brilliant!"))

That’s it. Behind the scenes it downloads a default model, tokenizer, and gives you usable output.

You can also swap in a specific model:

generator = pipeline("text-generation", model="gpt2")

print(generator("Once upon a time", max_length=30))

If you have a GPU, pass device=0 to speed things up.

Option 2: AutoModel and AutoTokenizer (More Control)

When you need to customize preprocessing, tweak performance, or build a real product, drop down a layer.

The Auto* classes let you load any model and tokenizer while keeping the workflow consistent.

from transformers import AutoTokenizer, AutoModelForSequenceClassification

import torch.nn.functional as F

model_id = "distilbert-base-uncased-finetuned-sst-2-english"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForSequenceClassification.from_pretrained(model_id)

inputs = tokenizer("Hugging Face makes AI simple", return_tensors="pt")

outputs = model(**inputs)

probs = F.softmax(outputs.logits, dim=-1)

print(probs)

Here you control every step: tokenization, forward pass, and decoding. That’s essential for production pipelines where you may batch inputs, or fine-tune the model.

Option 3: Inference API (No Local Setup)

If you don’t want to manage Python or GPUs, use Hugging Face’s hosted Inference API. You just send an HTTP request and get back predictions.

cURL example

curl https://api-inference.huggingface.co/models/gpt2 \

-H "Authorization: Bearer YOUR_HF_TOKEN" \

-H "Content-Type: application/json" \

-d '{"inputs": "Hello world"}'

This method is great for web apps, or when your code isn’t in Python.

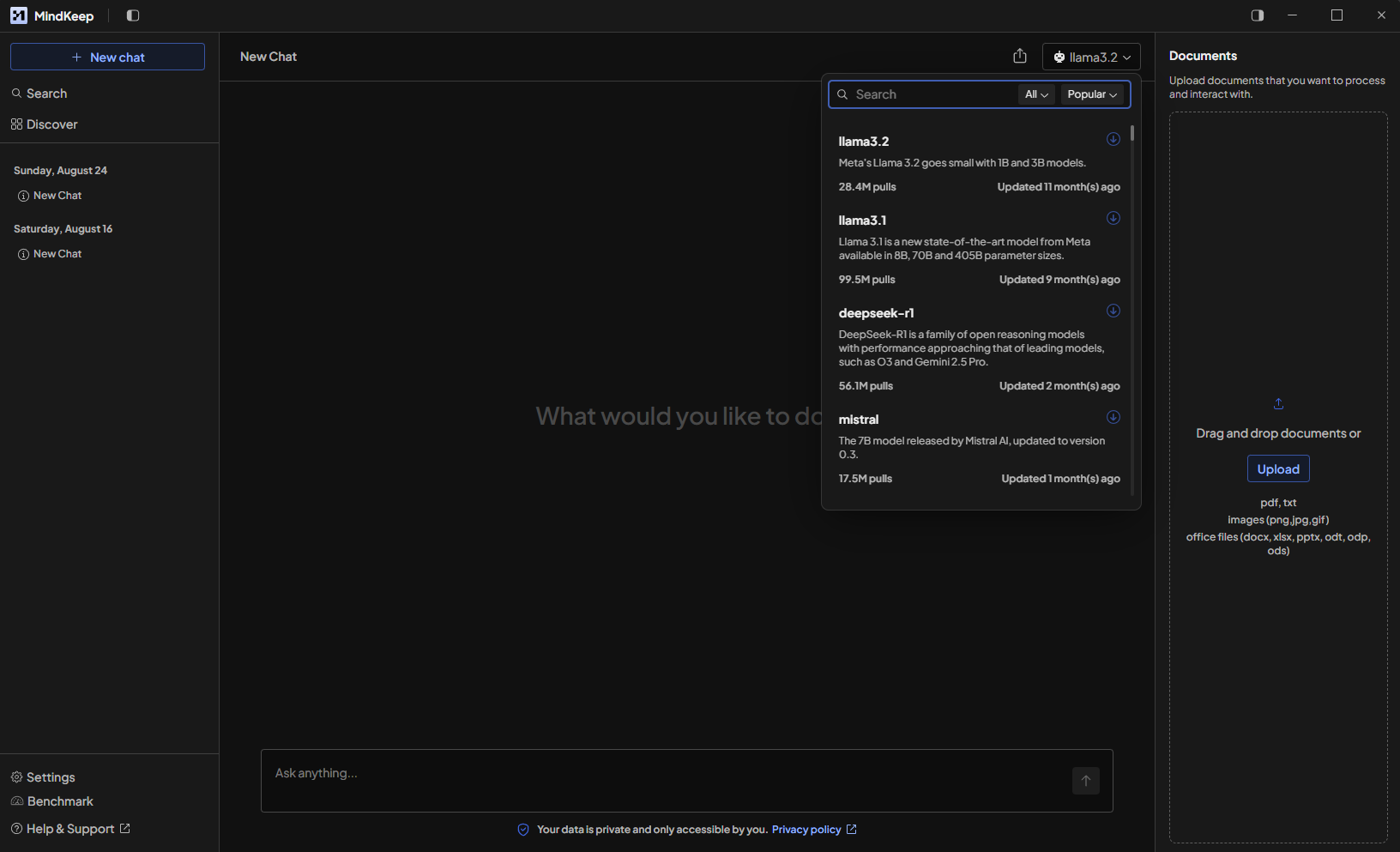

How MindKeep Does It For You

If setting up environments, and picking between pipelines and APIs feels like too much, that’s where MindKeep comes in. It’s your shortcut to Hugging Face without the trial-and-error.

Instead of worrying about whether to use PyTorch or TensorFlow, or how to cache models for offline use, MindKeep can:

- Spin up the right environment in seconds, with all the Hugging Face libraries pre-installed.

- Keep your models organized so you can track which ones you tried, what data you ran them on, and what worked best.

The idea is simple. You focus on the task (“summarize this document,” “classify these reviews”), and MindKeep quietly runs the best path under the hood.

That way you get Hugging Face power without babysitting installs or code.

Conclusion

Running AI models from Hugging Face is less about code length and more about choosing the right path. Use pipeline for quick wins, AutoModel for full control, and the Inference API for zero-setup scalability.

Set up your environment once, decide where you want the model to live, and let Hugging Face handle the messy parts.